En ce mardi 28 octobre, les utilisateurs du Projet Fedora seront ravis d'apprendre la disponibilité de la version Fedora Linux 43.

Fedora Linux est une distribution communautaire développée par le projet Fedora et sponsorisée par Red Hat, qui lui fournit des développeurs ainsi que des moyens financiers et logistiques. Fedora Linux peut être vue comme une sorte de vitrine technologique pour le monde du logiciel libre, c’est pourquoi elle est prompte à inclure des nouveautés.

Expérience utilisateur

L'environnement de bureau GNOME est proposé dans sa version 49. Cette version apporte comme d'habitude de nombreux changements.

Tout d'abord l'application Showtime

remplace Totem

en guise de lecteur vidéo par défaut. Basée sur la bibliothèque GTK4 et libadwaita, elle reprend les canons esthétiques minimalistes des applications GNOME. L'interface s’efface derrière la vidéo, n'affichant que les fonctions de base essentielles.

De même l'application pour afficher les documents notamment au format PDF passe de Evince

à Papers

. De la même manière que précédemment, elle utilise la nouvelle pile logicielle plus moderne, cela permet de refaire le visuel de l'application et d'améliorer dans le même temps les performances. La signature numérique des documents est aussi mieux intégrée de même que les annotations des documents.

L'application de calendrier a eu une amélioration de son accessibilité avec un focus important sur la navigation exclusive au clavier, ce qui a nécessité une refonte de l'interface. L'interface s'adapte en fonction de la taille de l'écran avec notamment la barre latérale qui peut être masquée. Il est également possible d'exporter des événements dans un fichier ICS.

Le navigateur Web bénéficie de nombreuses améliorations comme un bloqueur de publicités plus efficace avec plus de listes de blocage disponibles. La recherche dans la page est plus complète avec la prise en compte de la casse ou de la recherche de mots entiers uniquement. L'édition des marques pages, ainsi que le menu de sécurité pour la gestion des mots de passes ou des cartes de sécurité, ont été remaniés pour être plus simples.

La boutique logicielle bénéficie d'une amélioration significative de ses performances, ce qui était son principal point faible en partie à cause du volume de données à gérer pour les différentes applications disponibles provenant des Flatpak. La boutique consomme moins de mémoire, navigue plus rapidement d'une application à une autre et effectue des recherches efficacement.

La fonctionnalité de bureau distant s'améliore avec la prise en charge des entrées multipoints pour ceux utilisant un écran tactile. De plus le curseur de souris peut fonctionner avec un positionnement relatif ce qui était nécessaire pour quelques applications ou jeux vidéo. Ceux qui le souhaitent peuvent ajouter des écrans virtuels pour une telle session même s'il n'y a pas d'écrans physiques supplémentaires.

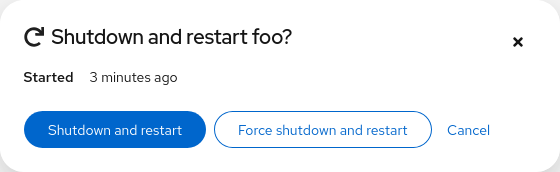

De manière plus générale, l'écran de verrouillage permet de contrôler basiquement les applications multimédia, en particulier pour la musique. Il est d'ailleurs possible d'arrêter ou de redémarrer la machine depuis cet écran de verrouillage en activant l'option idoine avec la commande gsettings set org.gnome.desktop.screensaver restart-enabled true

. Le bouton Ne pas déranger passe de la zone de notifications au menu principal de GNOME pour une meilleure cohérence de l'interface.

Enfin de nouveaux fonds d'écran sont proposés afin d'exploiter les nouvelles capacités des écrans HDR et l'espace de couleur Display P3 qui sont pris en charge sur les machines compatibles. Capacités HDR qui ont été améliorées par ailleurs avec de nouveaux paramètres pour gérer finement la luminosité de ces types d'écran.

GNOME fonctionnera uniquement en tant que session Wayland, les paquets liés à GNOME pour session X11 sont supprimés. Le projet GNOME a décalé cette décision pour sa prochaine version numérotée 50, mais Fedora maintient le cap car la qualité de ces sessions n'est pas aussi bonne que Wayland faute de tests et de maintenance de la part du projet depuis de nombreuses années. Grâce à XWayland, GNOME reste en capacité d'afficher et de gérer les applications (de plus en plus rares) reposant uniquement sur X11. Cela clôt en un sens la transition vers Wayland pour GNOME entamée il y a 9 ans déjà avec Fedora 25.

Tous les Spins disposent par défaut de la nouvelle interface web d'Anaconda. Fedora Linux 42 ne l'avait proposée que pour Fedora Workstation, en partie parce que certaines options ont dû être ajoutées pour supporter les Spins. En effet, sous GNOME, une partie de la configuration est faite après l'installation avec l'application GNOME Initial Setup. Par conséquent les spins pourront en plus configurer depuis Anaconda la date et l'heure, le compte utilisateur et le nom de la machine. De même, la configuration de la disposition clavier est gérée de manière légèrement différente. Enfin, l'interface n'est pas forcément affichée par une session de Firefox mais peut l'être via le navigateur web fourni par l'environnement en question.

Sur les machines x86_64 compatibles UEFI, Anaconda ne permettra plus d'installer Fedora si le format de partitionnement est basé sur MBR au lieu de GPT. Si la norme UEFI autorise une telle configuration, en réalité sa prise en charge correcte dépend des constructeurs et n'est pas bien testée notamment par l'équipe de Fedora ce qui rend une telle configuration peu fiable. De plus, le chargeur de démarrage GRUB2 suppose déjà qu'un système UEFI implique une table de partitions GPT. Cela a été maintenu par Fedora notamment pour permettre son utilisation dans le service cloud AWS qui ne proposait que de tels systèmes à une époque. Mais cette limitation n'a plus lieu d'être et sa suppression simplifie le test et réduit le risque de crashs au moment de l'installation. Cela ne concerne pas les autres architectures comme ARM car leur implémentation repose plus souvent sur MBR et est mieux gérée.

L'installateur Anaconda utilise le gestionnaire de paquet DNF dans sa 5e version dorénavant. Cela améliore la vitesse des opérations à l'installation de Fedora et uniformise la gestion des paquets au sein de Fedora en simplifiant aussi les tests de la distribution.

Fin de support de la modularité des paquets RPM dans l'installateur Anaconda. Les modules ont disparu dans Fedora depuis Fedora Linux 39, ce changement devenait nécessaire avec la non prise en charge des modules par DNF 5 qui est utilisé maintenant par Anaconda comme expliqué précédemment. Cela améliore aussi la maintenance d'Anaconda et de sa documentation en supprimant cette spécificité qui n'est plus utilisée.

Activation de la mise à jour automatique du système pour la variante Fedora Kinoite. Grâce au système atomique, la mise à jour se fait en arrière plan et est appliquée au prochain redémarrage. La fiabilité de l'opération avec la possibilité de revenir à l'état précédent autorise une mise à jour automatique sans trop de problèmes pour simplifier la vie de l'utilisateur et améliorer la sécurité. Les mises à jour des firmware ne sont pas concernées pour des raisons de fiabilité. La mise à jour se fait par défaut de manière hebdomadaire et une notification pour inviter à redémarrer est affichée quand c'est pertinent. Cette fonctionnalité peut être coupée et la fréquence des mises à jour reste configurable.

La police d'affichage d'émojis Noto Color Emoji utilise son nouveau format basé sur COLRv1

pour améliorer le rendu. Le rendu est meilleur car les images sont vectorielles et non sous forme d'images bitmap qui sont moins jolies quand la taille d'affichage augmente. Le tout en prenant moins d'espace disque.

Le fichier initrd utilisé lors du démarrage est compressé avec zstd

pour un démarrage plus rapide du système et une taille d'installation plus petite. Cela remplace la dépendance au binaire xz

utilisé jusqu'alors, les tests montrent une baisse de la taille d'installation de quelques méga octets pour un temps de démarrage réduit de quelques secondes en moins.

Gestion du matériel

La taille de la partition /boot

augmente de 1 Gio à 2 Gio par défaut. En effet la taille des firmwares, des initrd, du noyau, etc. ne cessent d'augmenter alors que la partition est restée à 1 Gio depuis 2016. Cela permet de réduire le risque de manquer de place dans un futur proche pour les nouveaux systèmes.

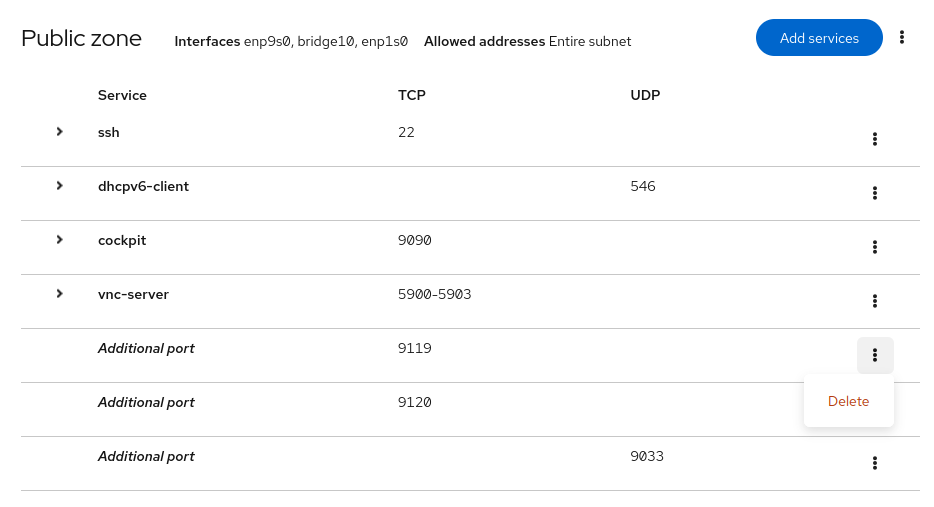

Prise en charge de la technologie Intel TDX pour exécuter des machines virtuelles avec une plus grande isolation mémoire depuis Fedora Linux. Pour les machines compatibles, cela signifie que chaque machine virtuelle tourne dans un espace mémoire dédié qui est chiffré ce qui apporte des garanties de sécurité et d'intégrité des données pour le système virtualisé.

Mise à jour de Greenboot vers la réimplémentation en Rust. L'objectif de ce composant est de fournir une vérification du système au démarrage et de redémarrer vers un état précédent du système en cas de défaut constaté ce qui est possible dans un système atomique et améliore l'expérience utilisateur en cas de mise à jour défectueuse. L'utilisateur a par ailleurs la possibilité d'ajouter ses propres tests. La précédente implémentation était en Bash et ne proposait que la prise en charge des systèmes basés sur RPM OSTree. Maintenant il peut gérer les systèmes reposant sur bootc

qui est de plus en plus employé.

Internationalisation

La police de type monospace aura une police alternative de ce type par défaut en cas de manque dans une langue donnée. Jusqu'ici une police sans-serif était utilisée par défaut ce qui n'était pas idéal car les contextes d'usage sont différents et cela pouvait donner lieu à des comportement imprévisibles suivant les polices installées sur le système. Cela concerne notamment les langues suivantes : l'arabe, l'hébreu, le thaï, le perse, l'hindi, le khmer, etc.

Administration système

Le gestionnaire de paquets RPM passe à la version 6.0 tours par minute. Mais les paquets fournis par le projet Fedora utilisent encore le format de la 4e version. Cette version se focalise sur une amélioration de sécurité, par exemple les clés OpenPGP affichent la version complète de l'id de la clé si l'empreinte numérique n'existe pas. Il est possible de fournir plusieurs signatures à un même paquet permettant d'utiliser des clés différentes suivant la provenance et les objectifs. La construction du paquet peut générer automatiquement la signature ce qui est intéressant dans le cadre de l'empaquetage local. Les clés OpenPGP version 6 sont prises en charge de même que les algorithmes dits post quantiques à base de courbes elliptiques. Il est également possible d'utiliser Sequoia-sq au lieu de GnuPG. Les paquets générés avec la 3e version ne sont cependant plus exploitables.

Réduction du nombre de règles SELinux dontaudit

liés au type unlabeled_t

. L'objectif de ces règles c'est souvent de réduire le nombre de rapports d'accès anormaux qui sont des faux positifs ou des accès bénins effectués par des applications mal écrites. Cette avalanche de notifications serait contre productive mais cacher ces accès sous le tapis ne permet pas de corriger ces comportements problématiques et complexifie le débogage. L'objectif est de désactiver seulement certaines de ces règles de la politique et non de les supprimer entièrement. Ainsi si le nouveau comportement vous pose problème, vous pouvez exécuter la commande # setsebool -P dontaudit_unlabeled_files 1

pour rétablir le comportement précédent.

Le paquet gnupg2

pour fournir l'outil de chiffrement est dorénavant découpé en plusieurs sous paquets. Cela permet de réduire la taille du système par défaut car uniquement les paquets nécessaires seront installés par défaut, laissant de côté les binaires plus optionnels. L'autre bénéfice est de simplifier le remplacement par Sequoia-PGP car il nécessite la présence de certains binaires de GnuPG mais en remplace d'autres ce qui n'était pas possible de faire avec un paquet unique. Les installations existantes installeront tous les sous paquets par défaut pour éviter de casser les systèmes existants.

Le filtre d'impression Foomatic-rip rejette les valeurs inconnues. En effet il est utilisé pour construire des commandes shell, PostScript ou Perl qui sont ensuite exécutées avant d'envoyer la tâche à l'impression via les options FoomaticRIPCommandLine

, FoomaticRIPCommandLinePDF

et FoomaticRIPOptionSettings

. Cette flexibilité permet d'écrire ou de modifier facilement le pilote de l'imprimante en question mais est source de vulnérabilités de sécurité aussi. Pour limiter les risques, par défaut aucune valeur pour ces options ne sont autorisées. L'utilisateur doit exécuter foomatic-hash

une fois pour vérifier la validité des options et s'assurer qu'elles ne font rien de problématiques et déplacer le dit fichier dans /etc/foomatic/hashes.d

pour autoriser leur utilisation. Cela ne concerne à priori que des vieux pilotes d'imprimantes et une partie de ceux fournis par CUPS ont déjà les validations nécessaires. D'autres devraient venir progressivement. Les pilotes d'imprimantes déjà installés au niveau du système seront aussi automatiquement validés après une mise à niveau vers Fedora Linux 43. À cause de la flexibilité de la méthode d'origine, corriger ou détecter tous les comportements problématiques automatiquement n'est pas possible.

Le projet 389 Directory Server ne permet plus de modifier ses bases de données BerkeleyDB. Les bases de données utilisaient par défaut LMDB depuis Fedora Linux 40 et l'abandon de BerkeleyDB se fera à priori pour Fedora Linux 45. L'objectif est de permettre la lecture des données pour les migrer vers le nouveau format et ainsi forcer les utilisateurs à effectuer la migration avant son abandon total.

Le gestionnaire de base de données PostgreSQL est mis à jour à sa 18e version. Cette version améliore les performances dans le sous-système des entrées-sorties asynchrones et dans l'optimiseur des requêtes. D'ailleurs, la migration d'une base de données à une version suivante conserve les statistiques de l'optimiseur. Une nouvelle fonction uuidv7()

permet de générer un UUID autorisant un tri basé sur un critère temporel. Par défaut les colonnes générées, celles dont le contenu est créé durant une opération de lecture, sont virtuelles et ne résident pas sur le disque. Les B-tree index multi-colonnes peuvent être utilisés plus souvent notamment s'il n'y a pas de contraintes sur les premières clés mais uniquement sur la dernière. Le protocole OAuth peut être utilisé pour s'authentifier dorénavant. Comme d'habitude, les versions 17 et 16 de ce logiciel restent disponibles dans les dépôts autorisant une mise à niveau progressive.

Le gestionnaire de base de données MySQL passe par défaut à la version 8.4. La version 8.4 était déjà proposée dans les dépôts, elle remplace juste la version 8.0 en tant que version de base. Cette dernière reste disponible via le paquet mysql8.0

.

Le serveur de courriels Dovecot est mis à jour vers sa version 2.4. Cette version change de manière significative la configuration qui nécessite une adaptation. Il est maintenant possible de stopper une commande mail qui prend du temps proprement. Pour l'authentification, les fonctions de hashage SCRAM-SHA-1-PLUS

et SCRAM-SHA-256-PLUS

sont maintenant disponibles de même que les liaisons de canal TLS. La bibliothèque Lua permet d’interagir avec le client DNS et HTTP fourni par Dovecot.

Le serveur d'application Tomcat est mis à jour vers sa version 10.1. Cela signifie qu'il suit la spécification Jakarta EE 10 ce qui implique un nouvel espace de nom qui passe de javax.*

à jakarta.*

. À cause de ce changement, la compatibilité ascendante n'est pas garantie et une recompilation des applications web est requise. De même l'API interne de Tomcat a beaucoup changé subtilement ce qui rend la compatibilité binaire impossible à garantir et nécessite une revue pour ceux qui interagissent avec ces APIs. Par défaut les requêtes et réponses Web se font en UTF-8 tandis que les sessions ne sont pas conservées après un redémarrage du serveur. Les fichiers de logs ne seront créés que lorsque du contenu doit être écrit dedans. Enfin les paramètres HTTP en commun entre HTTP 2 et 1.1 seront placés au niveau du connecteur HTTP 1.1 pour permettre à la version 2 d'en hériter et limiter ainsi la duplication des paramètres.

Migration de l'utilitaire de journalisation lastlog

à lastlog2

. Le premier intérêt de cette migration est de corriger le bogue de l'an 2038 qui n'affecte pas ce dernier. Il peut également d'utiliser la base de données SQLite 3 pour le stockage. Il utilise aussi des composants PAM pour l'authentification et la sortie est compatible de manière ascendante également. De plus il est légèrement plus performant en augmentant la taille de stockage à partir du nombre d'utilisateurs et non du plus grand id utilisateur dans le lot. Le risque de problèmes de compatibilité devrait être faible. La migration vers le nouveau format sera effectuée automatiquement et des liens symboliques vers les noms des anciens binaires sont créés pour éviter de casser des scripts existants.

L'information PLATFORM_ID

dans os-release

est supprimée. Cette information a été ajoutée dans le cadre de la modularité, mais avec l'abandon de celle-ci cette ligne n'est plus nécessaire. Ceux qui se basent sur cette information sont invités à changer d'approche.

Développement

La chaine de compilation GNU évolue avec binutils 2.45 et glibc 2.42. Pour binutils, les informations sframe générées par l'assembleur sont maintenant conformes avec la spécification SFrame V2. L'assembleur gère les dernières évolutions des architectures RISC-V, LoongArch et AArch64. Il peut également utiliser les directives .errif

et .warnif

pour avoir un diagnostic contrôlé par l'utilisateur avec des critères qui sont évalués uniquement à la fin de l'assemblage.

Pour la bibliothèque C glibc, elle prend en charge de nouvelles fonctions mathématiques introduites dans C23 telles que ompoundn

, pown

, powr

, rootn

et rsqrt

. Elle propose aussi en avance les fonctions valeurs absolues non signées qui seront proposées par la prochaine norme C. Ajout également de la fonction pthread_gettid_np

pour connaître l'id d'un thread à partir d'une structure opaque pthread_t

. Le cache local dans malloc

gagne en performance pour les petites allocations mémoire et peut prendre en charge des blocs à mettre en cache de plus grande taille via l'option glibc.malloc.tcache_max

. Le manuel a été particulièrement enrichi pour être plus complet notamment pour les threads, terminaux, systèmes de fichiers, ressources et fonctions mathématiques.

L'éditeur de lien Gold du paquet binutils-gold

est considéré comme déprécié et sera supprimé dans une version future. Fedora Linux a déjà quatre éditeurs de liens : ld.bfd

, ld.gold

, lld

et mold

. Se débarrasser de l'un d'entre eux qui n'est plus maintenu par le projet GNU n'est pas un problème et doit améliorer à terme la maintenance.

Mise à jour de la chaine de compilation LLVM à sa version 21. Les cartes graphiques AMD voient une amélioration de la prise en charge de ROCm de même qu'un effort pour proposer une bibliothèque C pour GPU. Mais les architectures RISC-V ou cartes graphiques de Nvidia ont également quelques améliorations mineures de ce côté. Le compilateur C CLang optimise l’arithmétique des pointeurs avec le pointeur null et comme souvent le suivi des futurs standards C et C++ se poursuit. Le compilateur fait de meilleurs messages d'erreurs pour les erreurs de compilation.

Côté Fedora, les RPM de ce projet sont compilés avec un profil d'optimisation ce qui devrait significativement améliorer les performances du compilateur maison.

Le langage Python mue à sa version 3.14. Cette version propose des template strings pour étendre les capacités des f-string afin de plus facilement modifier la valeur de la chaîne de caractères à partir d'autres variables. L'évaluation différée des annotations permet de résoudre les annotations de type qui sont cycliques. De plus, un nouveau module concurrent.interpreters

fait son apparition pour permettre l'exécution d'interpréteurs Python dans des fils d'exécution uniques pouvant communiquer entre eux, ouvrant la voie à un vrai parallélisme sans renoncer, pour le moment, au fameux verrou principal nommé GIL. Le module compression accueille l'algorithme zstd qui est de plus en plus utilisé dans divers projets libres. Enfin les UUIDs 6, 7 et 8 font leur apparition, permettant de générer des UUIDs respectant différents critères, les deux premiers autorisent un tri temporel quand le dernier est un assemblage de 3 entiers fournis en paramètre. Il y a évidemment d'autres changements améliorant notamment des performances ou rendant les messages d'erreurs plus clairs.

Le langage Go passe à la version 1.25. Il est possible dans cette version d'activer le détecteur de fuites de mémoire à la compilation d'un programme par la commande go build -asan

, le rapport sera généré à la fermeture du programme. La commande go vet

détecte les appels de fonctions sync.WaitGroup.Add

mal placés de même que l'usage de fmt.Sprintf("%s:%d", host, port)

pour construire des adresses de connexions qui sont invalides en cas d'usage d'IPv6. Un nouveau ramasse-miettes expérimental fait son apparition, il doit réduire l'impact de ces opérations de 10-40% pour un programme standard qui fait beaucoup d'allocations et de désallocations de petits objets. En terme d'expérimentations, un nouveau module expérimental encoding/json/v2

fait son entrée en matière pour l'encodage et le décodage de JSON, qui doit être plus performant pour le décodage tout en supprimant certaines limitations. Pour les développeurs, un nouveau module runtime/trace.FlightRecorder

permet de facilement enregistrer la trace d'événements précis dans le programme dans le contexte de débogage tout en étant léger pour ne pas perturber l'exécution du logiciel par les traces complètes habituellement utilisées. Dans le contexte du débogage, le format DWARF5 pour les informations de débogage peut être exploité ce qui améliore la taille du binaire et le temps de l'édition des liens par ailleurs.

Le langage Perl fourni une réponse brillante avec sa version 5.42. L'accent a été mis pour améliorer les performances dans cette version. Mais en plus de cela, les méthodes peuvent être déclarées avec une visibilité réduite avec l'instruction my method

, ces méthodes peuvent être appelées grâce à l'opérateur ->&

. Deux nouveaux opérateurs expérimentaux all

et any

pour vérifier une condition sur l'ensemble, et respectivement aucun, des éléments d'une liste. Un pragma source::encoding

peut être apposé sur un fichier source pour détecter les erreurs d'encodage du dit fichier s'il ne correspond pas à celui mentionné. Une classe peut générer automatiquement un setter pour un de ses attributs via l'attribut :writer

qui fonctionne de manière analogue à :reader

pour le getter.

La boîte à outils Ruby on Rails démarrera voie 8.0. La gestion de certaines dépendances a été améliorée, en effet pour bénéficier de certaines fonctions telles que les Websockets, les jobs ou le cache il était nécessaire d'utiliser au choix MySQL, PostgreSQL ou Redis. Maintenant SQLite s'ajoute à la liste des possibilités et cela passe par de nouvelles couches d'abstractions Solid Cable, Solid Cache et Solid Queue. Fournir des fichiers statiques repose sur Propshaft

au lieu de Sprockets

, qui est bien plus simple en tirant profit des changements dans le monde Web opérés depuis 15 ans tels que les frameworks JavaScript ou la bonne gestion des petits fichiers avec le protocole HTTP/2 qui permettent de déléguer la gestion de ces problématiques aux frameworks JavaScript directement. Pour finir, elle propose un moyen simple de générer un template basique mais efficace pour la gestion de l'authentification d'une application, en exécutant bin/rails generate authentication

vous obtenez un modèle basique pour la session et l'utilisateur avec des contrôleurs pour la session et l'authentification elle même.

La machine virtuelle Java OpenJDK 25 est fournie. Parmi les changements, un effort a été consenti pour améliorer le mécanisme de l'Ahead-of-Time, la mise en cache de la JVM. Il devient en effet plus facile d'exécuter une application simple pour générer la liste des objets et modules nécessaires pour rendre les prochains lancement plus rapides et de même les profils générés pour accélérer l'application seront immédiatement exploités au démarrage pour également accélérer le lancement de l'application. Le système d'enregistrement des événements est étendu avec la possibilité d'enregistrer précisément le temps d'exécution d'une fonction et sa pile d'appels. Il est possible d'appeler d'autres fonctions ou opérations dans un constructeur avant qu'il n'appelle lui même un autre constructeur du même objet ou de sa classe parente ce qui peut simplifier le code en le rendant plus naturel.

L'utilitaire dans l'écosystème Java nommé Maven bénéficie de la version 4 en parallèle de la version 3. La version 4 est accessible via le paquet maven4

depuis les dépôts. Parmi les changements fondamentaux, il y a une séparation entre les besoins de compilation et d'utilisation du fichier pom.xml

qui mentionnait dans le détail les informations de compilation ou des dépendances ce qui était rarement utile pour les utilisateurs lors du déploiement. Maintenant le fichier destiné à être distribué ne mentionne plus ces informations superflues. Dans ce contexte, un nouveau type de paquet bom

est fourni pour générer une liste de composants nécessaires pour ce logiciel ce qui est un atout dans une optique de traçabilité et de suivi des failles de sécurité par exemple. Pour clarifier la différence entre les modules de Maven et ceux de Java, les modules sont renommés subprojects, d'ailleurs ces sous-projets peuvent déduire des informations du parent comme sa version par exemple ce qui rend inutile de le mentionner à nouveau. Les sous-projets peuvent aussi être découverts au sein du projet évitant le besoin de les déclarer explicitement et systématiquement.

Le compilateur pour le langage Haskell GHC a été mis à jour vers sa version 9.8 et son écosystème Stackage vers la version 23. Le langage fonctionnel dispose des ExtendedLiterals

pour préciser le type précis d'un entier défini tel quel dans le code, comme 123#Int8

pour un entier représenté uniquement sur 8 bits. Dans le bas niveau, un logiciel compilé avec -mfma

pour permettre l'usage des instructions, qui combinent multiplication et addition (telle que fmaddFloat# x y z

qui donne x * y + z

) si l'architecture matérielle les supporte ce qui peut améliorer les performances du programme. Il introduit des nouveaux pragmas WARNING

et DEPRECATED

à des fonctions d'un module pour signaler à un appelant de prêter attention à son utilisation, notamment en cas de suppression planifiée de la dite fonction.

Le langage de programmation fonctionnel Idris dispose d'une mise à jour majeure vers sa 2e version. La version 1 reposait sur Haskell mais il devenait de plus en plus difficile de le générer avec des versions récentes du compilateur GHC. La version 2 est implémentée en Scheme et repose sur la théorie des types quantifiés, ainsi une variable assignée à une quantité 0 est effacée, assignée à une quantité 1 elle est utilisée une seule et unique fois, etc. Ce changement de paradigme rend de nombreux programmes écrits pour Idris 1 incompatibles. De plus le Prelude du langage ne contient que les éléments strictement nécessaires dans la plupart des programmes non triviaux, le reste est relégué dans dans la bibliothèque base.

Le compilateur Free Pascal propose des paquets permettant la compilation croisée avec d'autres architectures. Ce changement facilite la compilation de programmes pour d'autres systèmes sans quitter sa Fedora Linux. Les paquets nécessaires sont de la forme fpc-cross-$CPU

et fpc-units-$CPU-$OS

où le premier fourni le compilateur en lui même à destination d'une architecture matérielle spécifique quand le second fourni des objets précompilés nécessaires la plupart du temps pour générer un programme pouvant s'exécuter sur le matériel et système considéré. Si vous souhaitez compiler pour du Windows x86 il faudra installer par conséquent les paquets fpc-cross-i386

et fpc-units-i386-win32

.

La bibliothèque d'Intel tbb

pour paralléliser certaines tâches passe à la version 2022.2.0. Les changements sont relativement mineurs mais à cause de la rupture de compatibilité de l'ABI, les logiciels s'en servant doivent être recompilés pour fonctionner avec cette nouvelle version.

La signature cryptographique des informations de débogage debuginfod est maintenant vérifiée automatiquement du côté du client. Les paquets RPM fournissaient la dite signature depuis Fedora Linux 39, le client comme serveur prenaient en charge la fonctionnalité, il ne manquait plus qu'une configuration adéquate pour permettre ce changement. Cela se fait en éditant le fichier de configuration /etc/debuginfod/elfutils.urls

ainsi :

ima:enforcing https://debuginfod.fedoraproject.org ima:ignore

Cela ne le fait que pour les informations de débogage provenant du projet Fedora, pour les autres il faudra activer cela manuellement de manière similaire.

En cas de signature invalide, les fichiers concernés seront rejetés et considérés comme non disponibles ce qui améliore la fiabilité et la sécurité du débogage.

La bibliothèque Rust async-std

est considérée comme dépréciée avant une suppression future. Il n'est en effet plus maintenu et il est recommandé de passer à la bibliothèque smol

à la place.

La bibliothèque Python python-async-timeout

est considéré comme dépréciée avant une suppression future. Cette fonctionnalité est en effet fournie dans la bibliothèque standard depuis Python 3.11 rendant cette bibliothèque obsolète. Cependant une trentaine de paquets s'en servent encore directement rendant sa suppression impossible à ce stade.

Le paquet python-nose

a été retiré. Déprécié par Fedora depuis plus de cinq ans et sans maintenant depuis plus longtemps, sa suppression devenait nécessaire avec l'impossibilité de s'en servir avec la dernière version de Python. Les paquets qui en avaient encore besoin ont été corrigés pour s'en passer.

Les paquets concernant d'anciennes versions de GTK pour Rust gtk3-rs

, gtk-rs-core

version 0.18, et gtk4-rs

ont été retirés. Ils étaient dépréciés depuis Fedora Linux 42 car non maintenus.

Projet Fedora

Koji utilise localement au sein du projet Fedora une instance de Red Hat Image Builder pour générer certaines images qui en dépendent. Cela concerne les images Fedora IoT et Minimal qui étaient construites via l'infrastructure de Red Hat, Koji ne servant que d'orchestrateur côté Fedora. Cela posait quelques soucis dont la possibilité d'intervenir en cas de problèmes mais aussi le fait qu'il était impossible de geler les changements de l'infrastructure aux moments adéquats pour l'élaboration des nouvelles images. Le paquet koji-image-builder

a été créé pour permettre d'exécuter cette machinerie localement au sein de l'infrastructure du projet Fedora. L'objectif est d'inclure cela dans Koji intégralement à terme quand ce sera suffisamment testé.

La génération de l'image Core OS repose sur le fichier de définition de conteneurs Containerfile

. Jusqu'ici l'image était conçue via RPM OStree avant d'être convertie en image OCI. L'objectif est donc de sauter l'étape RPM OStree en utilisant les conteneurs, l'image de référence est basée sur une Fedora Linux avec bootc

. Cela simplifie la procédure de génération de l'image et permet facilement à quiconque de reproduire ces étapes s'il le souhaite en utilisant podman

.

Arrêt de publication des mises à jour de Core OS sur le dépôt OSTree. Cela fait suite au changement introduit dans la version précédente de Fedora où l'utilisation des images OCI avait débuté et que les nœuds existants allaient progressivement migrer vers ce format. Désormais maintenir la publication de mises à jour OSTree n'a plus de sens et permet de réallouer les ressources humaines et matérielles.

Le système d'intégration continue de Fedora ne prend plus en charge le format Standard Test Interface. Il évoluait conjointement avec Test Management Tool qui a maintenant les mêmes capacités et continue d'évoluer. N'avoir plus qu'un format permet de simplifier la maintenance et l'outillage. Il y avait de plus en plus d'erreurs liées à l'usage de STI pour certains paquets incitant à faire la migration. Le format TMT a quelques avantages dont une meilleure organisation des tests et des environnements de tests. Les tests sont également reproductibles localement ce qui est important pour la résolution de problèmes. Grâce à l'intégration avec Packit il peut facilement exécuter les tests à partir des sources du logiciel si besoin ce qui est utile en cas de nouvelle version d'un paquet ou voir si un correctif spécifique à Fedora introduit des régressions.

Les nouveaux paquets recevront automatiquement une nouvelle configuration basée sur Packit pour permettre la gestion automatique de certaines tâches dans le cadre des nouvelles versions de Fedora. Cette étape est faite lors de la création du projet sur le service src.fedoraproject.org, un correctif fournit automatiquement cette configuration initiale. Cela peut être manuellement désactivé lors de la création si un mainteneur ne le souhaite pas. L'intérêt est de simplifier l'accueil de nouveaux empaqueteurs mais aussi d'automatiser certaines tâches par défaut. En cas de nouvelle version d'un composant, le paquet avec cette version sera automatiquement crée pour identifier les éventuels problèmes, et s'il n'y en a pas, permettre de créer automatiquement la nouvelle version du paquet et de la diffuser si l'empaqueteur accepte cette nouvelle version. Cela peut aider à gérer plus de paquets et à mieux les maintenir sur le long terme.

Les bibliothèques statiques fournies par les paquets RPM de Fedora conservent les informations de débogage pour permettre de comprendre l'origine des crashes des applications les exploitant. Cela est rendu possible par la mise à jour du composant debugedit

qui permet une telle opération sans trop de problèmes. En effet il peut maintenant collecter les fichiers sources d'une bibliothèque statique et réécrire les chemins vers le répertoire /usr/src/debug

comme attendu par les différents outils de débogage. Cependant les informations de débogage sont fournies dans les paquets RPM des binaires pour éviter la complexité de les inclure manuellement pour les développeurs qui en ont besoin dans leur logiciel. L'espace disque nécessaire pour ces informations est considéré comme relativement négligeable pour prendre cette décision. Pour les développeurs qui ne veulent pas des informations de débogage dans leur binaire peuvent exécuter la commande strip -g

après l'édition de liens.

Les macros dédiées pour générer les paquets Python reposant sur setup.py

sont dorénavant dépréciées. Cela concerne les macros %py3_build

, %py3_install

, et %py3_build_wheel

. En effet ces macros reposent sur ce script qui ne sera plus maintenu à partir d'octobre 2025 par le projet setuptools

. L'objectif est d'inciter et de migrer progressivement ces paquets vers la nouvelle méthode reposant sur les macros %pyproject_*

et exploitant le fichier de configuration pyproject.toml

. Environ 35% des paquets Python de Fedora sont donc concernés par cette migration à venir qui permettra de moderniser et d'unifier les conventions tout en suivant les bonnes pratiques de l'écosystème Python.

Les paquets Go sont compilés en utilisant par défaut les dépendances du projet compilé plutôt que d'utiliser systématiquement des dépendances basées sur des RPM gérés par le projet Fedora. En effet les binaires Go sont tous statiquement compilés donc l'objectif de maintenir des dépendances ne sert qu'à la construction des dits paquets. Cela concerne environ 1400 paquets pour générer 400 paquets avec un binaire Go. C'est beaucoup de travail de maintenance et cela limitait la possibilité d'inclure plus de paquets Go car il fallait empaqueter toutes les dépendances nécessaires au préalable. Cependant avec cette méthode il devient plus difficile de corriger les bogues ou problèmes de sécurité introduits par ces dépendances si Fedora ne les récupère pas. Et pour les cas où il est préférable d'avoir les dépendances gérées par le projet, cela sera un travail plus important pour un paquet donné car moins de dépendances seront déjà disponibles dans les dépôts. Le suivi des licences nécessite d'être adapté pour s'assurer qu'il n'y a pas de problèmes de compatibilité mais des outils existent déjà pour réduire cette problématique.

Les paquets NodeJs utiliseront un nouveau formalisme pour le chemin de leurs dépendances. En effet le répertoire %{_libdir}/node_modules

pointait vers un répertoire spécifique à une version de NodeJs comme %{_libdir}/node_modules20

pour la version 20 de NodeJs. Mais cette solution était plutôt pénible avec Fedora qui propose plusieurs versions de NodeJs en parallèle. L'objectif est de mettre en commun %{_libdir}/node_modules

pour les modules où c'est possible, et pour ceux qui ont par exemple un binaire WASM, ils seront affectés dans un répertoire dépendant de la version. Cela simplifie la procédure d'empaquetage de ces composants tout en réduisant les doublons et en évitant les incompatibilités. Cela aide également à mettre en évidence les dépendances binaires cachées pour les reconstruire au sein du projet Fedora, ou les supprimer si cela n'est pas possible, à terme.

Les macros CMake ne fourniront plus de variables d'installation non standards. Cela concerne les variables -DINCLUDE_INSTALL_DIR

, -DLIB_INSTALL_DIR

, -DSYSCONF_INSTALL_DIR

, -DSHARE_INSTALL_PREFIX

et -DLIB_SUFFIX

. Cela ajoute de la confusion notamment parce que la signification de certaines variables n'est pas transparente comme INCLUDE_INSTALL_DIR

qui pourrait attendre des chemins relatifs ou absolus. CMake 3.0 a standardisé beaucoup de choses dans GNUInstallDirs

qui sont massivement utilisés depuis. Cela permet de mieux s'intégrer dans l'écosystème de ces projets et limite le risque d'erreurs lors de la construction des paquets.

L'assembleur YASM est considéré comme déprécié pour utiliser NASM à la place. Il n'est en effet plus maintenu même si encore utilisé dans quelques paquets tel que Firefox. D'autres distributions ont déjà sauté le pas.

Ajout des nouveaux macros RPM _pkg_extra_***flags

pour permettre à chaque paquet d'ajouter des nouvelles options à la compilation à la liste par défaut fournie par le projet Fedora. L'intérêt est d'avoir un moyen standard d'étendre les options de compilation d'un paquet plutôt que chacun les édite à sa sauce et cela permet dans le même temps de voir facilement quels paquets et quelles options sont personnalisés.

Certaines limitations de l'outil gpgverify

utilisé par les mainteneurs de paquets sont corrigées permettant de supprimer des méthodes de contournement associés. En effet l'outil est utilisé pour s'assurer que les sources pour construire le paquet sont bien celles souhaitées. Par exemple il était incapable de lire plusieurs clés GPG fournis dans un fichier dédié comme nginx pouvait le faire. Il était également incapable de gérer automatiquement la signature des sommes de contrôle des archives, il exigeait que l'archive des sources elle même soit signée ce qui n'était pas toujours le cas. Maintenant il prendre également en compte les clés au format keybox

. Le tout améliore la maintenance des paquets concernés mais évite aussi la possibilité de contourner la sécurité dans certains cas.

La communauté francophone

L'association

Borsalinux-fr est l'association qui gère la promotion de Fedora dans l'espace francophone. Nous constatons depuis quelques années une baisse progressive des membres à jour de cotisation et de volontaires pour prendre en main les activités dévolues à l'association.

Nous lançons donc un appel à nous rejoindre afin de nous aider.

L'association est en effet propriétaire du site officiel de la communauté francophone de Fedora, organise des évènements promotionnels comme les Rencontres Fedora régulièrement et participe à l'ensemble des évènements majeurs concernant le libre à travers la France principalement.

Si vous aimez Fedora, et que vous souhaitez que notre action perdure, vous pouvez :

- Adhérer à l'association : les cotisations nous aident à produire des goodies, à nous déplacer pour les évènements, à payer le matériel ;

- Participer sur le forum, les listes de diffusion, à la réfection de la documentation, représenter l'association sur différents évènements francophones ;

- Concevoir des goodies ;

- Organiser des évènements type Rencontres Fedora dans votre ville.

Nous serions ravis de vous accueillir et de vous aider dans vos démarches. Toute contribution, même minime, est appréciée.

Si vous souhaitez avoir un aperçu de notre activité, vous pouvez participer à nos réunions mensuelles chaque premier lundi soir du mois à 20h30 (heure de Paris). Pour plus de convivialité, nous l'avons mis en place en visioconférence sur Jitsi.

La documentation

Depuis juin 2017, un grand travail de nettoyage a été entrepris sur la documentation francophone de Fedora, pour rattraper les 5 années de retard accumulées sur le sujet.

Le moins que l'on puisse dire, c'est que le travail abattu est important : près de 90 articles corrigés et remis au goût du jour.

Un grand merci à Charles-Antoine Couret, Nicolas Berrehouc, Édouard Duliège, Sylvain Réault et les autres contributeurs et relecteurs pour leurs contributions.

La synchronisation du travail se passe sur le forum.

Si vous avez des idées d'articles ou de corrections à effectuer, que vous avez une compétence technique à retransmettre, n'hésitez pas à participer.

Comment se procurer Fedora Linux 43 ?

Si vous avez déjà Fedora Linux 42 ou 41 sur votre machine, vous pouvez faire une mise à niveau vers Fedora Linux 43. Cela consiste en une grosse mise à jour, vos applications et données sont préservées.

Autrement, pas de panique, vous pouvez télécharger Fedora Linux avant de procéder à son installation. La procédure ne prend que quelques minutes.

Nous vous recommandons dans les deux cas de procéder à une sauvegarde de vos données au préalable.

De plus, pour éviter les mauvaises surprises, nous vous recommandons aussi de lire au préalable les bogues importants connus à ce jour pour Fedora Linux 43.

comments? additions? reactions?

As always, comment on mastodon: https://fosstodon.org/@nirik/115713891385384001